The “Godfather of AI” Predicted I Wouldn’t Have a Job. He Was Wrong.

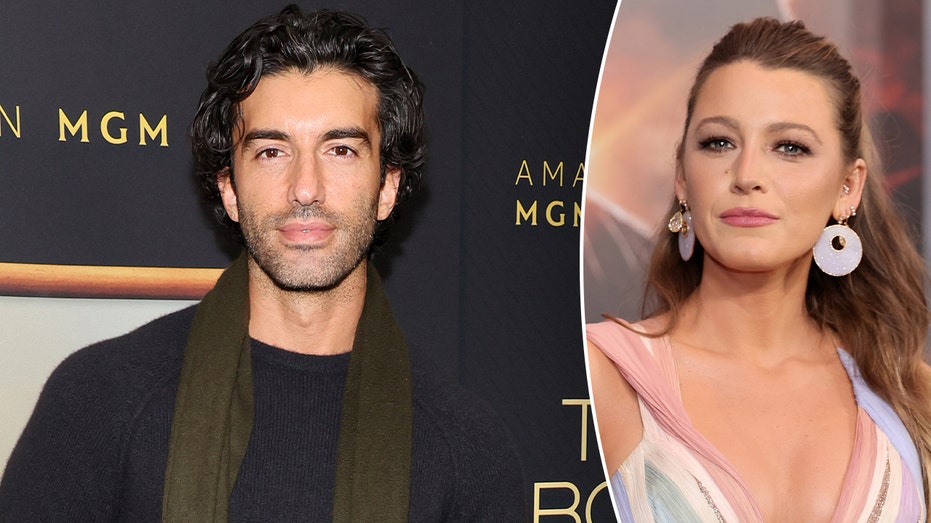

Earlier this month, Geoffrey Hinton, the 76-year-old “Godfather of AI,” was awarded the Nobel Prize in physics. Hinton’s seminal contributions to neural networks are credited with ushering in the explosion in artificial intelligence today, but lately he has gained a reputation as an alarmist: After more than a decade at Google, he quit last year to focus instead on warning the public about the risks posed to humanity by AI.

In my own line of work, however, Hinton achieved notoriety in 2016 when he predicted that my job would be obsolete by now.

I am a physician in my second year of residency, but I was a junior in college when Hinton suggested that we should stop training radiologists immediately. “It’s just completely obvious that within five years deep learning is going to do better than radiologists.… It might be 10 years, but we’ve got plenty of radiologists already.” His words were consequential. The late 2010s were filled with articles that professed the end of radiology; I know at least a few people who chose alternative careers because of these predictions.

Eight years have passed, and Hinton’s prophecy clearly did not come true; deep learning can’t do what a radiologist does, and we are now facing the largest radiologist shortage in history, with imaging at some centers backlogged for months.

That’s not to say Hinton was entirely wrong about the promise of AI in radiology, among other fields. But it’s become clear in my field that his hyperbolic predictions of 2016, just like those of today’s AI skeptics, are missing the much more nuanced reality of how AI will—and won’t—shape our jobs in the years to come.

It takes a long time to become a radiologist. After college, there are four years of medical school, a preliminary year of general medicine, four more years of radiology residency, and then one to two years of subspecialty fellowship training. Such extensive education implies a complexity and gravity to the work that should render it unimpeachable, but which also makes the prospect of replacement by algorithm that much more unsettling. I spent over a decade studying only to lose my job to a computer?

The worry is understandable, given the massive interest in applying machine learning to radiology. Of the nearly 1,000 FDA-approved AI-enabled medical devices, at least 76 percent are designed for use in radiology. There are so many papers published on machine learning that our field’s preeminent organization, the Radiological Society of North America, has a dedicated journal just for radiology and AI, with topics ranging from pediatric neuro-oncology to digital breast tomosynthesis.

While most of these applications are, for now, theoretical (my workstation has only one rudimentary built-in AI package), it would be fair to say that AI is on everyone’s minds. Medical students rotating through the reading room ask whether we residents see ourselves having a job in 10 years; engineering types praise the objectivity of computer reasoning, while senior radiologists rue the lost art, the human gestalt, in medicine. Hospital administrators, computer science Ph.D.s, surgeons, and even my nonmedical friends declare that AI is either here to save the day or to “eat my lunch.”

Among my peers, some predict a future in which AI lightens our loads, maximizes efficiency, decreases errors, and cements the indispensability of diagnostic radiologists. Others subscribe to Hinton’s view that radiologists are like “the coyote that’s already over the edge of the cliff but hasn’t yet looked down,” and thus plan to take the highest-paying job available, diligently working and saving for when the floor finally falls out. The future is framed as a battle of AI versus M.D., and we are increasingly asked to pick a side, to cast our vote for either the humans or the machines.

It doesn’t help that most coverage of this topic focuses on the accuracy of AI compared to human radiologists, emphasizing the spectacle of man against machine. Are radiologists like John Henry, banging away at the mountain as the steam engine creeps up behind us? Or will AI in radiology end up like the Segway, the Fitbit, Google Glass, Bitcoin, or Theranos—at best a nifty tool, at worst an utter sham?

This is a false binary—an invitation to unwarranted despair or heedless chauvinism. The memes about the epic failures of AI are just as silly as the people, including Nobel laureates, who say we will all be replaced. From my vantage point—within the field, but only starting my journey—it seems most certain that we will chart a middle path. As Curtis Langlotz said in a mantra that bears repeating: It’s not that AI will replace radiologists, but that “radiologists who use AI will replace those who don’t.”

The length of a career in radiology, and the skills imbued during our training, allow for adaptation to new technologies. A few of my faculty mentors are old enough to remember the pneumoencephalogram, a technique in which cerebrospinal fluid was drained from around a patient’s brain and replaced with air, after which X-rays were obtained as the patient rotated in an elaborate, vaguely medieval gurney. It was only a few decades ago that the radiology department housed physical film and radiologists operated a foot pedal that rotated radiographs onto a viewbox. Now we have brain MRIs.

As a nascent radiologist, I envision a future in which algorithms perform some of the tasks we do now, but there exist other images or procedures—perhaps not yet invented—that people like me continue to perform and oversee. While AI boosters generally focus on machine learning in image interpretation, AI is also being explored for “noninterpretive tasks” like reducing imaging artifacts, shortening scanning time, and optimizing technologist workflows. It’s challenging at this point to see through the fog of hype, although I don’t doubt that AI will play a significant role in my job in a few decades. I’m reminded of futurologist Roy Amara’s aphorism: “We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.”

Still, I have reservations about AI in radiology, particularly when it comes to education. One of the main promises of AI is that it will handle the “easy” scans, freeing radiologists to concentrate on the “harder” stuff. I bristle at this forecast, since the “easy” cases are only so after we read thousands of them during our training—and for me they’re still not so easy! The only reason my mentors are able to interpret more advanced imaging is that they have an immense grounding in these fundamentals. Surely, something will be lost if we off-load this portion of training to AI, as it would if pilots turned over their “easy” flights to computers. As the neuroradiologist and blogger Ben White has pointed out, inexperienced radiologists are more likely to agree with an incorrect AI interpretation of a scan than radiologists with more experience, suggesting that in the future we will need even stronger humans in radiology, not rubber-stampers.

The problem with defeatist predictions is that they can always be updated, no matter how many times they’ve been proven false. Last year, Hinton refreshed his timeline, predicting deep learning parity with radiologists in another 10 or 15 years. But that has hardly put to bed the specter of replacement in my field.

It’s telling that since picking on radiologists, Hinton has moved on to warnings about AI’s existential risk. If he is to be believed, artificial general intelligence will pose a threat to mankind far greater than unemployment, and would render trivial our debates over job security or plagiarism or deepfakes. I’m not in a position to disprove him, but I tend to believe that Wile E. Coyote had the right idea: Keep moving.