The Vile Sextortion and Torture Ring Where Kids Target Kids

The network, known as 764, has been responsible for horrific abuse and blackmail across the globe. Authorities and tech companies are just starting to take action.

The abuse that Ali saw was unfathomable.

Children cutting the names of their abusers into themselves, children abusing younger siblings, teenagers killing their pets—all of it was celebrated as an accomplishment.

“There was this 13-year-old girl,” said Ali, a former victim of this community, to whom VICE News is referring by a pseudonym. “On stream, she cut her entire body for them. She would do this sometimes daily. They called them cut shows. They would have her cut things into herself, and then they would allow others to tell her what to cut into herself.

“This is the same girl that one leader had convinced to kill her kittens on stream.”

If you or somebody you know is being targeted or abused by sextortionists, you do have options. If you can’t reach out to a loved one or law enforcement, you can reach out to the National Center for Missing & Exploited Children and/or use its Take It Down app to anonymously remove your images from most platforms.

All of this happened on Discord, the immensely popular chat app, on a server known as “Cultist.” According to Ali, the goal was “to be the most evil.” If that sounds childish, that’s because it was. The abuse consisted of children victimizing other children. The community sustains itself on a cycle of violence in which victims, after sustaining immense abuse and trauma, turn into abusers themselves—often because they see it as the only way to escape their situation.

VICE News has investigated this group for over a year and has found a sextortion ring that spans the globe and revels in the worst content imaginable. This reporting, based on information from victims, court documents, and interviews with experts, describes a community where children push other children into horrific self-harm, all for the sake of cruelty and clout—and all on some of the world’s most powerful platforms. Since being birthed half a decade or so ago, its activities, the scope of which isn’t clear even to the best-informed experts, have resulted in scores of victims and inspired several vigilantes, even as its ties to an occult neo-Nazi group and sensationalized descriptions of them have given rise to what amounts to a satanic panic.

It’s not difficult to understand why the group’s activities would inspire vigilantes and sensationalistic coverage.

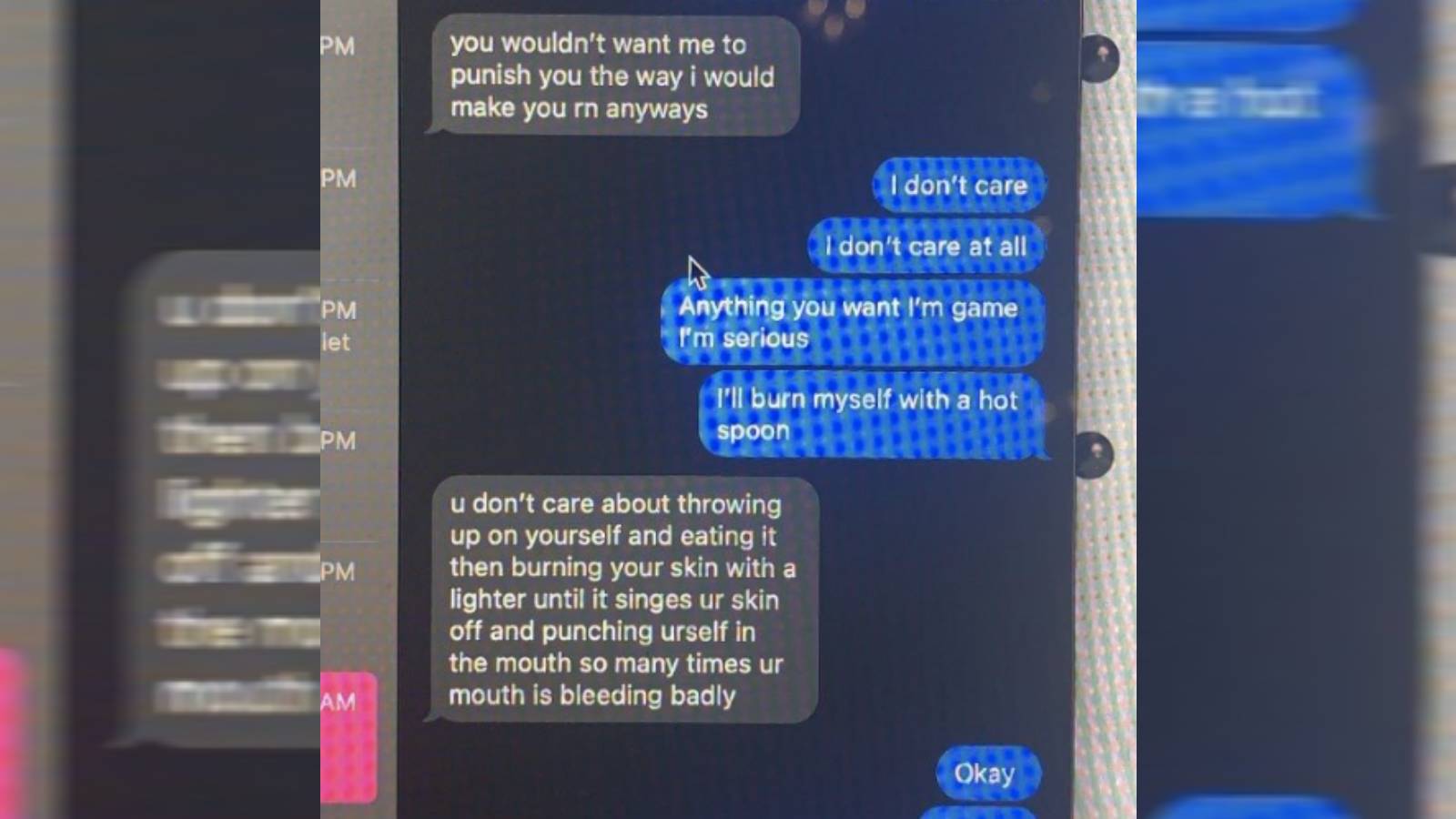

One of the most popular forms of content the predators force victims into creating is known as a “cut sign.” This is when a victim carves their abuser's name into their body. Content like this is used almost as social currency among the community—the more cut signs you have, the more power you wield. In some cases, according to victim statements and screenshots of group chats reviewed by VICE News, abusers have sold the opportunity to have their victims carve a buyer's name into their skin.

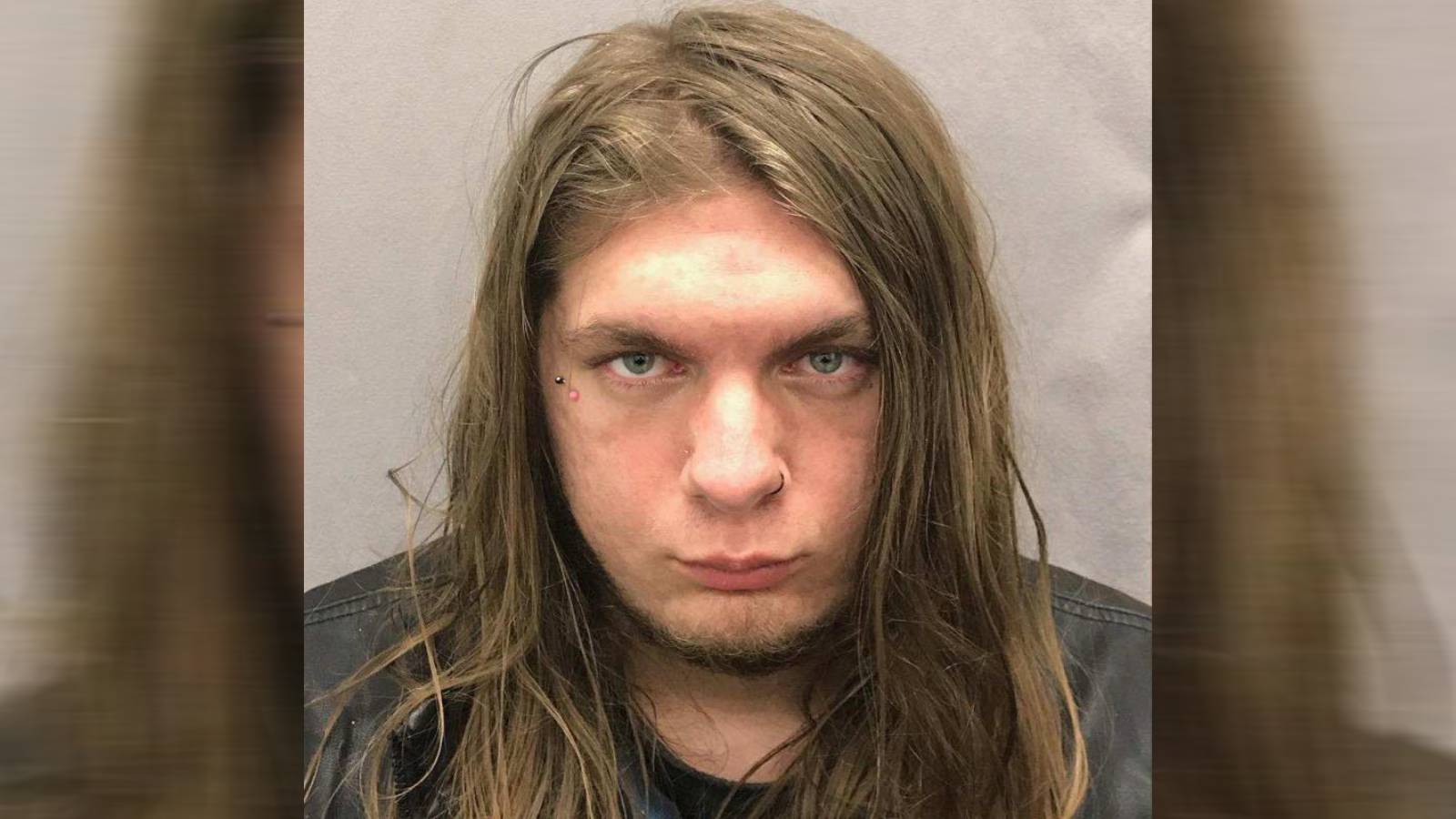

There are entire Telegram channels devoted solely to sharing cut signs—which, for some in the community, are considered relatively tame. Inside Cultist there were countless images of these signs and, according to Ali, the server left scores of victims in its wake. One of the heads of the server was a young man named Kalana Limkin, who was arrested in Hawaii in December and faces charges including possession of child pornography. Limkin, who went by “vore” in the server, has yet to go to court, but if found guilty, he will likely spend the rest of his life in prison.


Ali, alongside other victims, spent months gathering information on Limkin, which they then provided to the FBI. This likely led to his arrest.

Since Limkin’s arrest Cultist has, for the first time in years, gone quiet. But it was just one part of an online ecosystem I will, for the sake of this article, be calling 764.

‘The absolute worst, most vile form of human taboo’

764, strictly speaking, isn’t a site, or a place, or a group. It’s a network of online communities using several social media platforms (primarily Telegram, where the recruitment and spread of content occur, and Discord, where the actual abuse and grooming take place), with its own lingo, celebrities (typically the abusers), and hierarchy. There are multiple factions within the community, each headed by distinct leaders.

“There are all types of people,” said Ali, who spent months being abused by a cult leader in Cultist who threatened to send explicit images of her to her family. After getting out she decided to fight back and aid others in getting out of these groups as well as collect information on the perpetrator. She reached out to VICE News after hearing her abuser was arrested in December. “There were a lot of predators, and there were a lot of victims. There was this mindset that they were trying to get new girls. They even groomed some of the current girls to try and recruit new girls to be groomed.

“If you could get a girl that was ungroomed and then groom her for the first time, that gave you status,” she added. “To have a girl that you're grooming, that 'it's her first time,’ was the goal for a lot of them.”

The engine behind this nightmare factory is sextortion, a form of blackmail that’s becoming increasingly prevalent. In the broadest sense, it refers to a predator convincing a victim to send sexually explicit images of themselves, and then using those images to bend the victim to their will—this most commonly involves money but can, for instance, involve threatening to send pictures to the victim’s parents if they don’t cut themselves. In many cases, these demands involve the creation of child sexual abuse material, or CSAM, and “hurtcore” content, which focuses on the non-simulated infliction of pain and humiliation on children, typically toddlers.

Coverage of 764 has often focused on supposed ties to the occult. This isn’t out of nowhere; Limkin and other people who have been arrested in connection to 764 have had connections to the Order of Nine Angles, an esoteric satanic group connected to neo-Nazism. While it’s true there exists some relationship, that relationship is thinner than it's been made out to be. At the end of the day, these are children and pedophiles hurting children.


While it’s impossible to determine exactly how widespread the issue is, researchers who have investigated 764 for years tell VICE News they estimate the number of victims of organized sextortion groups to be in the hundreds. Collectively the members of 764 boast that they have left thousands of children traumatized; this is almost certainly not the case. As in many other online communities, clout is the ultimate goal of 764, and to be on top, you need to be the scariest, the baddest person. Exaggerations of both the depravity of the group and the number of its victims need not be taken at face value—the truth is bad enough.

What can be said without a shadow of a doubt is that the group exists and that law enforcement is taking it seriously. Over the past two years, several major players in the world of 764 have been arrested, and police are starting to recognize it as—according to court documents describing one member’s arrest—a “network that targets minor victims online, grooming and coercing them through threats, violence, blackmail and extortion to engage in destructive behavior, including producing child sexual abuse material (‘CSAM’) and engaging in self-mutilation, as well as violent acts against others.”

VICE News has identified multiple 764-affiliated groups, but will not be naming them, both to avoid promoting them and because names are somewhat beside the point. The names for these factions are plentiful and similar, typically an arrangement of three or four numbers, or “sinister” words at times spelled using l33t (a modified form of English found online that replaces letters with numbers). They exist on multiple social media platforms at once, and it's not unheard of for them to change their names in the hopes of beating moderation. While there may be surface-level changes among the groups, their modus operandi remains the same and in many cases, they share overlapping membership.

Ali, who was inside the Cultist server, explained the layout. Alongside the normal channels one would find on Discord, like one meant to welcome new members and one for general discussion, there was a “nudes” channel that contained sexual images Limkin was sent—almost all of which came from minors—and a “cut signs” channel. She said all of this was publicly available to anyone who joined the server. The group had a bot that was programmed to read the self-reported age and gender of a new initiate to the group. If they met the criteria they would be marked as “groomable.”

Several factions exist within the 764 ecosystem and, according to Ali, there exists a sort of rivalry between the leaders of the communities. The only way to win is to push your victims to the very limit.

“There were animal abuse and sexual acts with animals, and there were also a few cases where they would (force) people who had younger siblings to either do something to the younger sibling or things like that,” said Ali, who added that one of the group’s goals was to drive a victim to suicide. “That's what [Limkin] would have liked to be, but he wasn't quite able to get it up to that point.”

Matthew Kriner, the director of intelligence at Middlebury University’s Center on Terrorism, Extremism, and Counterterrorism, told VICE News that 764 “peddles in the absolute worst, most vile form of human taboo, with the very strategic intention of using that to harm others and then perpetuate its existence.”

VICE News has identified at least five people who have been arrested in the United States in connection to the group, but because the perpetrators are so young, researchers believe there may be more arrests hidden behind publication bans worldwide. All were arrested within the last five years, and their crimes are all connected to luring minors and child pornography.


The group chats, which take place on both Telegram and Discord, are large, unruly things that are hard to keep track of. Almost all of the grooming typically happens in direct messages, where predators use a variety of tactics including love bombing or tricking a victim with photos of a minor they had previously sextorted. The group recruits from a wide array of sources, which include traditional social media platforms as well as games like Minecraft and Roblox.

Members focus much of their attention on LGBTQ communities and ones devoted to topics like eating disorders and self-harm; they perceive these communities as vulnerable and more susceptible to their manipulation tactics.

“[The victims] do not have the typical life,” said Ali. “There's a lot of them that have been abused, either physically or sexually. There's a high rate of sexually abused girls in there.”

A young woman who we will be calling Jessie in this story told VICE News she first learned of 764 groups in a community for anorexia. Jessie says she was the victim of a high-profile member of one 764 faction, and that her content is still being shared. Her abuser has not yet been arrested.

However they find a victim, the predator will, at a certain point, have the minor send them sexual images. A typical sextortionist asks for money when they’ve received this content—financial sextortion against young boys makes up the majority of the crimes—but not those in this group. These predators will ask the victim to make content for them, which can range from cut signs to killing a family pet to abusing a younger sibling.

According to court documents, and the testimony of those who have been inside the groups, the victims are almost all children. The perpetrators likewise skew young—a 17-year-old in Texas was just sentenced to 80 years in prison for child pornography crimes committed within the group—but there are certainly some older people in the group. Richard Densmore, a veteran in his 40s, was arrested in late January in connection to Limkin’s group. He faces charges in connection to possessing CSAM and coercing a minor into making it on a live stream, and court documents allege young girls cut allusions to Densmore into their skin. Several sources have told VICE News that the Discord server Densmore ran was connected to 764—a claim corroborated by court documents. One mentioned Densmore by name before he was arrested.

Through his lawyer, Densmore offered VICE News no comment but pointed toward his not-guilty plea.

While the predators find their victims in communities across the internet, their primary home is on Telegram and Discord where they constantly play a whack-a-mole game with moderators; as one server is taken down, they’ll hop to another platform, organize another server, and hop back.

The way that 764 collects and distributes the content it creates—it creates online locations to host images and personal information of its victims in things it has dubbed “lorebooks”—makes it easy for someone who has been tormented by the group to be revictimized. Some of those in the community are revictimized by multiple predators. Ali said that some get to a place where they believe this is the only way they can maintain a “relationship dynamic and that is why it's so hard for a lot of the victims to get out of.”

For some, the only way to escape the abuse that was being inflicted on them was to inflict abuse on others.

You Can Get Help

During the worst days of her abuse, Jessie wouldn’t leave her room.

“I didn't eat anything. I didn't drink anything,” she told VICE News. “I was vomiting nothing but stomach bile and crawling to my fridge to get food. I was very much not OK. It's extremely traumatizing.”

This is a common dynamic among victims of these gangs; to be one is an intensely isolating experience, and several told VICE News they felt like they were trapped and had no options. Kathryn Rifenbark, the director of survivor services at the National Center for Missing & Exploited Children, or NCMEC, told VICE News that it’s important for victims to know there are options available to them. If they feel they can’t reach out to a loved one in their life, they can reach out to an organization like NCMEC.

“So many children feel a lack of hope and feel a lot of despair in those moments and we have seen children that have taken their own lives due to the distress that these situations cause them,” said Rifenbark. “So it's very important that they identify someone that they can reach out to to help them and know that they are not alone. We help many children every single day, so they're not alone and there is help out there for them.”

There are also tools like Take It Down (operated by NCMEC), which youths can anonymously use to help remove content that is being used to abuse them. The tool works by generating a unique digital fingerprint, or hash, of the content, which platforms can use to find the image and take it down. This can be done without informing a parent or law enforcement or giving any sort of personal information.


Some of the victims that VICE News spoke to about 764 found themselves going back into the communities to see if their content continued to be shared. Jessie told VICE News she knows that her former abuser is using her images to catfish minors into his sextortion ring and that she is in a continuous battle to counter it.

“The stuff that ends up on my phone by joining these groups to try and see where he's sending my nudes and when I see these minors being like harmed … It's a lot on me,” Jessie said. “I'm angry. I'm very angry and I'm upset and I feel like it's like my duty in life right now to do anything I can to get him in trouble.”

Stephen Sauer, the director of CyberTip.ca—a Canadian tipline focused on sextortion and giving aid to children in dangerous situations—told VICE News that many people who have dealt with the trauma caused by these crimes constantly worry about their content reemerging.

“That comes as a long-term issue because they're constantly worried about being recognized,” said Sauer. “We have some kids who are and young adults actually who were victimized as children that are constantly searching for their abuse material online to get it removed.”

Oftentimes, the predators threaten victims’ families, especially if they can coerce personal information out of their victims.

“When there are threats involving your family, because it feels like your actions that you've taken have now affected the people around you, that's like a really scary thing,” said Ali. “It feels easier to comply than to tell somebody about it, especially if they're threatening you if you tell someone about it. But it's just another way to scare you into continuing.”

If you have any knowledge about 764 sextortion groups or the Order of Nine Angles, please feel free to reach out to Mack Lamoureux and VICE News in confidence at mack.lamoureux@VICE.com.

Like any enterprising online community looking for members, the group finds its victims in an array of places. A GNET piece written by Marc-André Argentino, Barrett Gay, and M.B. Tyler called “764: The Intersection of Terrorism, Violent Extremism, and Child Sexual Exploitation” is the best piece of literature publicly available about the group. In it, the authors write that 764 has a “presence on YouTube, Instagram, Discord, Snapchat, X (formerly Twitter), Telegram, Twitch, TikTok, Steam, Mega, and Roblox,” which they use for both promotion and recruitment. What’s more, the group is sharing tips on how to snare young people into its web.

“People don't understand how quickly some of these communications can happen,” Sauer told VICE News. “I think there's a lot to be said about the fact that the offending community out there has kind of come together and are educating one another.”

When reached for comment, Meta removed the accounts flagged by VICE News as examples of 764-affiliated profiles and stated that it is aware of the problem and is actively investigating it in tandem with NCMEC. Spokespeople for Mega and Snapchat told VICE News they have a zero-tolerance policy for sextortion and have taken several steps to address the problem.


Jessie said that even after she removed herself from the abuse, she would at times be targeted again. In some cases, her former abuser even contacted her current partner to brag about what he did to her. But now, since she’s spoken to people and gotten help, he doesn’t wield the same power he once did.

“He’s unsettling, very unsettling. There is something wrong with him. It's like there's like a light in his head that's actually missing,” Jessie told VICE News. “But I’m not scared of him, like at all.”

A growing problem

One of the most popular apps among teenagers—Discord—is, for many in 764, the primary grooming and abusing grounds.

A Discord spokesperson told VICE News the company is aware of the 764 threat and has partnered with law enforcement and researchers regarding it. They called the threat “significant” and said that anything they encounter they report to NCMEC and law enforcement. They did not share any hard numbers about the number of groups the company has taken down or accounts it’s banned, but said Discord has been attempting to proactively detect the group. Working with NCMEC, the company uses hashes generated by NCMEC’s Take It Down program to track CSAM and identify groups sharing it.

"We find that they organize on more encrypted platforms and attempt to share out [information about a new server] when they do get back up and running on Discord before we take them down again,” said a Discord spokesperson.

When asked if they were working with other platforms to combat 764, the spokesperson declined to get into details but added they were taking an “ecosystem-based approach.”

According to Discord’s self-reporting, the number of accounts it has removed from the platform in connection to “child safety” is rising sharply. On its transparency page, the company states it removed 37,000 accounts for child safety in the last quarter of 2022; that number more than tripled to 116,000 in the final quarter of 2023.

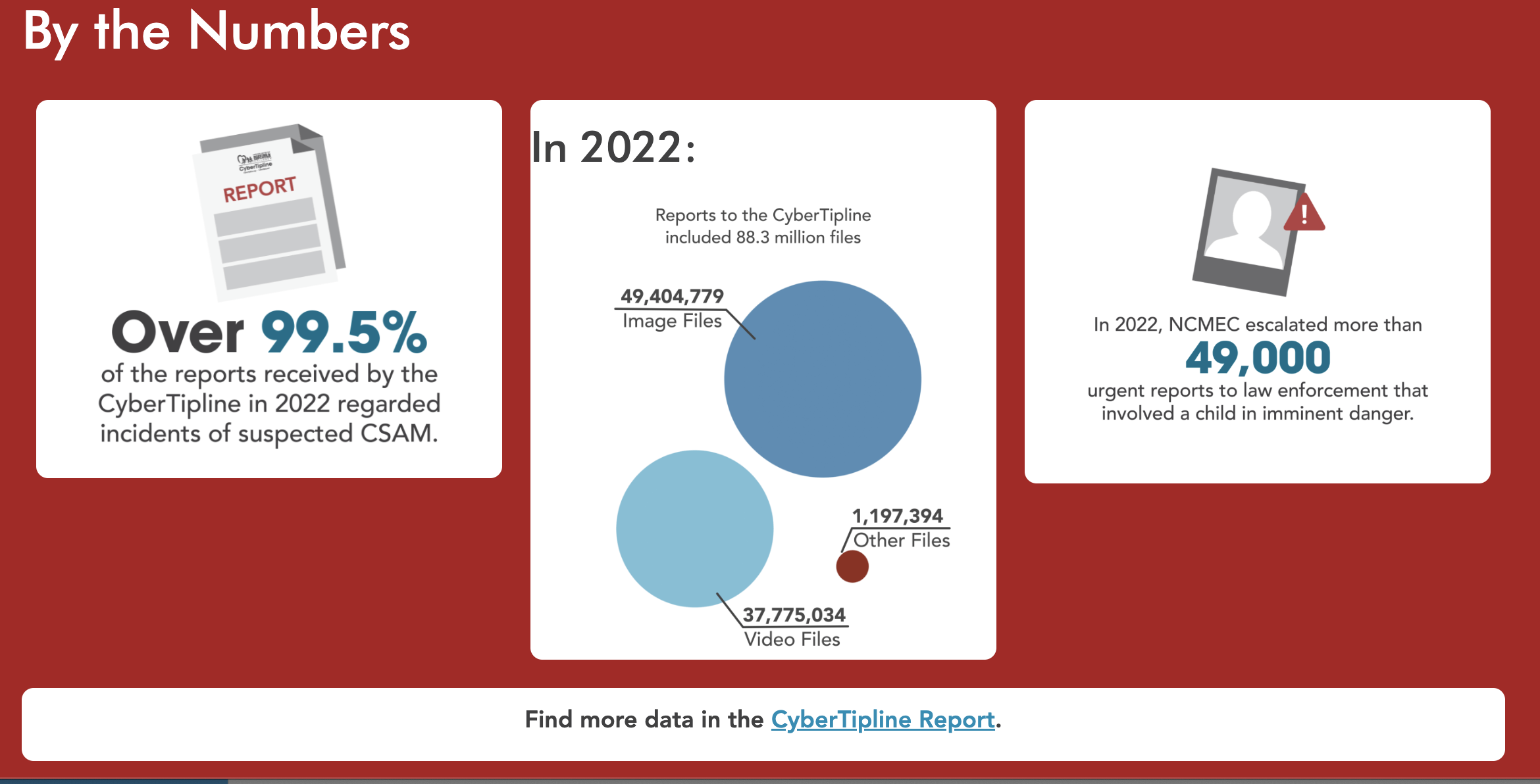

The FBI declined to comment on the issue but did point me to a briefing about the threat of sextortion. According to the FBI, the majority of the sextortion it’s seeing is financial and primarily comes from overseas. In the briefing, the FBI said that from October 2021 to March 2023 it received over 13,000 reports of sextortion of minors, which led to “at least 20 suicides.” The problem appears to be accelerating at a dangerous rate—from October 2022 to March 2023 the FBI saw a 20 percent increase in reporting. This data is consistent with NCMEC’s; the group says that between 2021 and 2023 the number of online enticement reports it received on its tip line increased by 323 percent.

All of these numbers should be taken more as directional than definitive. It’s unclear to what extent rising numbers reflect more reporting and enforcement, as opposed to more abuse; law enforcement sources pointed out to VICE News that factors such as a platform rolling out a reporting tool in a new language or new country can lead to a massive spike in reports without any detectable increase in prohibited activity. But it does appear the problem is getting worse.

‘Child pornography is probably (his) least egregious crime’

Last year, 17-year-old Bradley Cadenhead was sentenced to 80 years in prison after pleading guilty to nine counts including possessing and promoting child pornography. Speaking about the content found in Cadenhead’s possession, one officer who worked on the case told a local news outlet, “The child pornography is probably the least egregious crime he has committed; he is a scary kid.”

Cadenhead was only 16 when he was arrested. His case offers a possible hint at the origins of 764.

According to court documents obtained by VICE News, Cadenhead said that he first became obsessed with violent pictures and videos at age 10, and that this fascination grew as he aged. Eventually, he met a person on Minecraft with whom he cultivated this fascination. Then, as the court documents state, he started “a group called 764." In the online world he created, Cadenhead’s influence over the members of his server was “extreme” and, according to the documents, he considered himself a cult leader.

Before him, there was a sextortion group called CVLT, which became infamous when Kaleb Merritt, one of its members, traveled across multiple states to find one of his online victims, a 12-year-old girl, in real life and rape her multiple times. Merritt, then 21, met his victim on Instagram and began to abuse her online before traveling from Spring, Texas, to Bassett, Virginia, to find her in person. According to court documents, Merritt camped out behind his victim's home for several days, coercing her into meeting up with him in his tent, where he then raped her and took nude photos of her. In 2022, Merritt was sentenced to 350 years in prison for his crimes.

Limkin’s court documents refer to 764 and CVLT, the groups Cadenhead and Merritt were in.

“Limkin was an associate of the groups known as ‘CVLT’ and ‘764’ and identified as the founder of the splinter group, ‘Cultist’, which focused on more specific behaviors, such as promoting child pornography, child exploitation, sexual extortion, and trafficking, doxing, swatting, ‘fed’ing,’ manipulation, animal cruelty and self-harm of minors,” read his documents.

Now, this isn't a problem just localized to North America. Both the perpetrators and victims can be found across the globe. Some of the most shocking crimes tied to this group can be found overseas.

In late January, Brazilian police announced they arrested two teenagers in “Operation Discord,” and while the reports don’t specifically name 764, the modus operandi is the same. The teenagers were at the center of a sextortion ring that was built around “coercing children and teenagers to send intimate videos; practiced self-harm with administrators' usernames and a swastika design; and committed animal abuse.” One of the teenagers was caught with CSAM on their mobile phone.

In 2022, a German teenager who was living with a Romanian foster family attacked a 74-year-old woman, beat her to the ground, and cut her throat. He filmed the attack and shared it online. Just days before, the teenager had attacked another senior citizen, pushing him down a flight of stairs and cutting his face. That elderly man lived.

The crime was investigated by the Romanian outlet Libertatea and German publication Der Spiegel, which reported the teen’s indictment said he committed the attack “to provide content with a high degree of violence within the online groups he was part of.” When investigators searched his computer, they found CSAM and content with extreme violence. A journalist covering the case spotted several faded tattoos on the young man's body at a court hearing, including a swastika, the username of a well-known leader in the group, and, written on his forearm in faded ink, three numbers.

“764.”

Traps and propaganda

Reporting on a community like 764 is exceptionally difficult, not just because it involves extremely unsettling material but because it involves CSAM that is, with incredibly narrow exceptions, categorically illegal to view or possess. This means that not only are journalists highly limited in their ability to investigate, but so are most of the sources on whom they normally rely, such as academics, activists, independent researchers, and even all but the most specialized law enforcement officials.

Things are even more restrictive in Canada, where I am located, than in the U.S.; child pornography is defined here not simply as the vile material one thinks of initially, but also as any sort of visual or written material that describes, advocates for, or “counsels sexual activity with a person under the age of eighteen years.”

“I’m guessing you need these,” one source I worked with said after dropping off screenshots corroborating some information, “because you, for whatever reason, don’t want to or can’t check them yourself.” He was correct.

Reporting on this group, then, meant circumnavigating the extremities of this extremist community. There are many areas that I would not and could not investigate myself, because it would put me at risk of engaging with illegal material. I had to rely on specialist researchers, infiltrators, and abuse survivors to walk me into the legal sides of the community and provide me with screenshots and video clips showing the inner workings of the sextortion rings.

Despite these difficulties, the group has proven to be a bona fide hit for some in the true-crime world. How could a neo-Nazi satanic cult that’s grooming children not be?

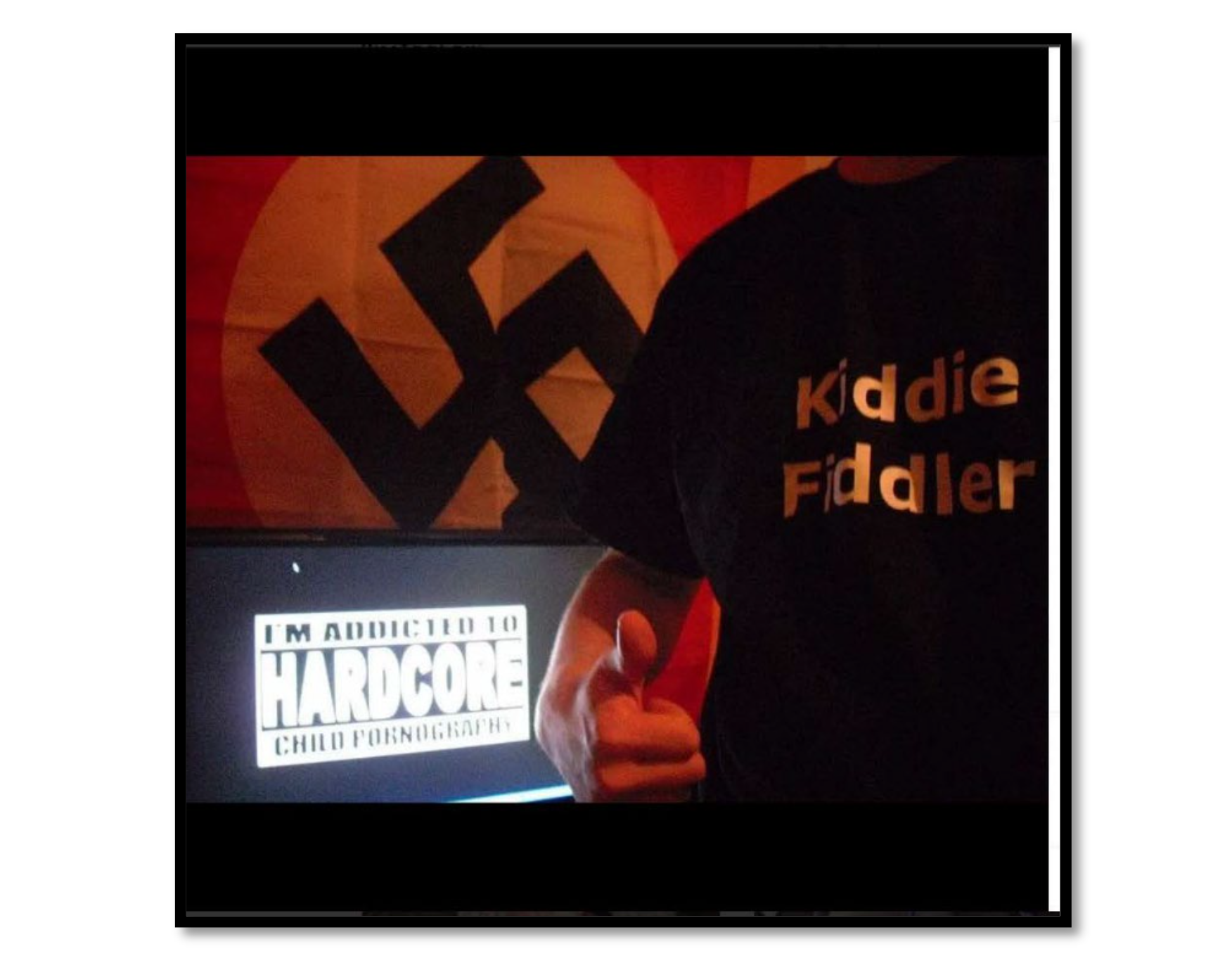

What is driving the actual acts, though, is far more murky than you’ll ever learn in a minute-long TikTok video warning you to never let your children use Discord. Evidence that VICE News gathered and researchers who spoke to VICE News suggest that while the group has certainly adopted aesthetics from the Order of Nine Angles, a fascist occult group focused on destabilizing society, and there are certainly some elements within it, this is not what is driving the crimes.


O9A has been the focus of researchers and law enforcement for years now, and it has been tied to multiple crimes, including many involving CSAM. At the risk of simplifying a complicated group, the goal of O9A is to weaken society by attacking taboos and norms, and few things are more taboo than CSAM. To push their goal they infiltrate other groups and try to distort them to their own end. The group's influence could be prominently found in neo-Nazi accelerationist groups like Atomwaffen and The Base.

Researchers believe there is a strong chance that the origins of 764 were tied to the O9A, but they've since grown apart. Both Ali and Jessie told VICE News that while they saw satanic imagery, that’s as far as it ever went.

"It wasn't a satanic cult,” said Ali. “There wasn't really a satanic aspect in it. There are a few people who are Satanists, but it was more just like a group of hatred and child stuff.

“It wasn’t ever like ‘you’re doing this for Satan’ or even brought up.”

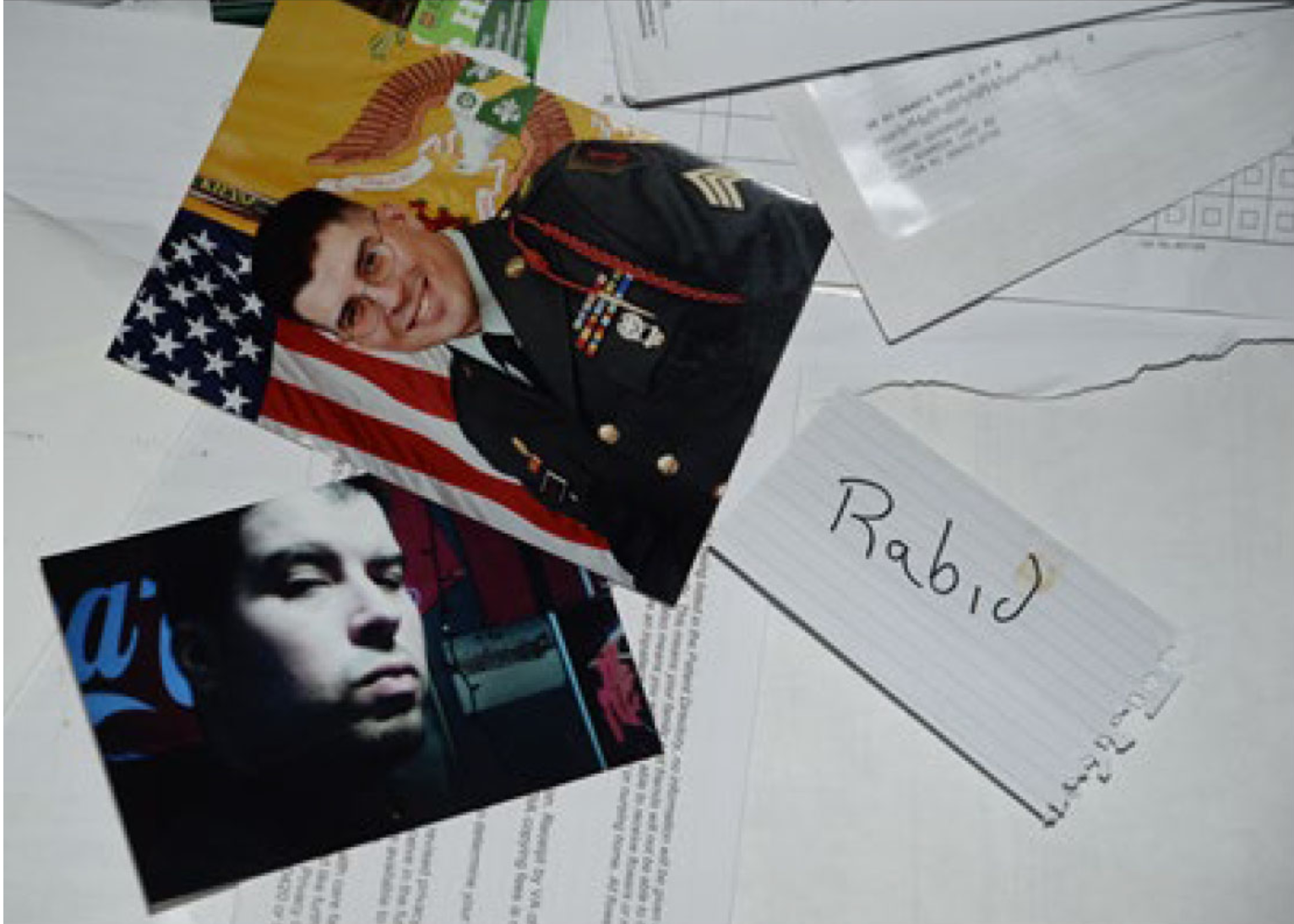

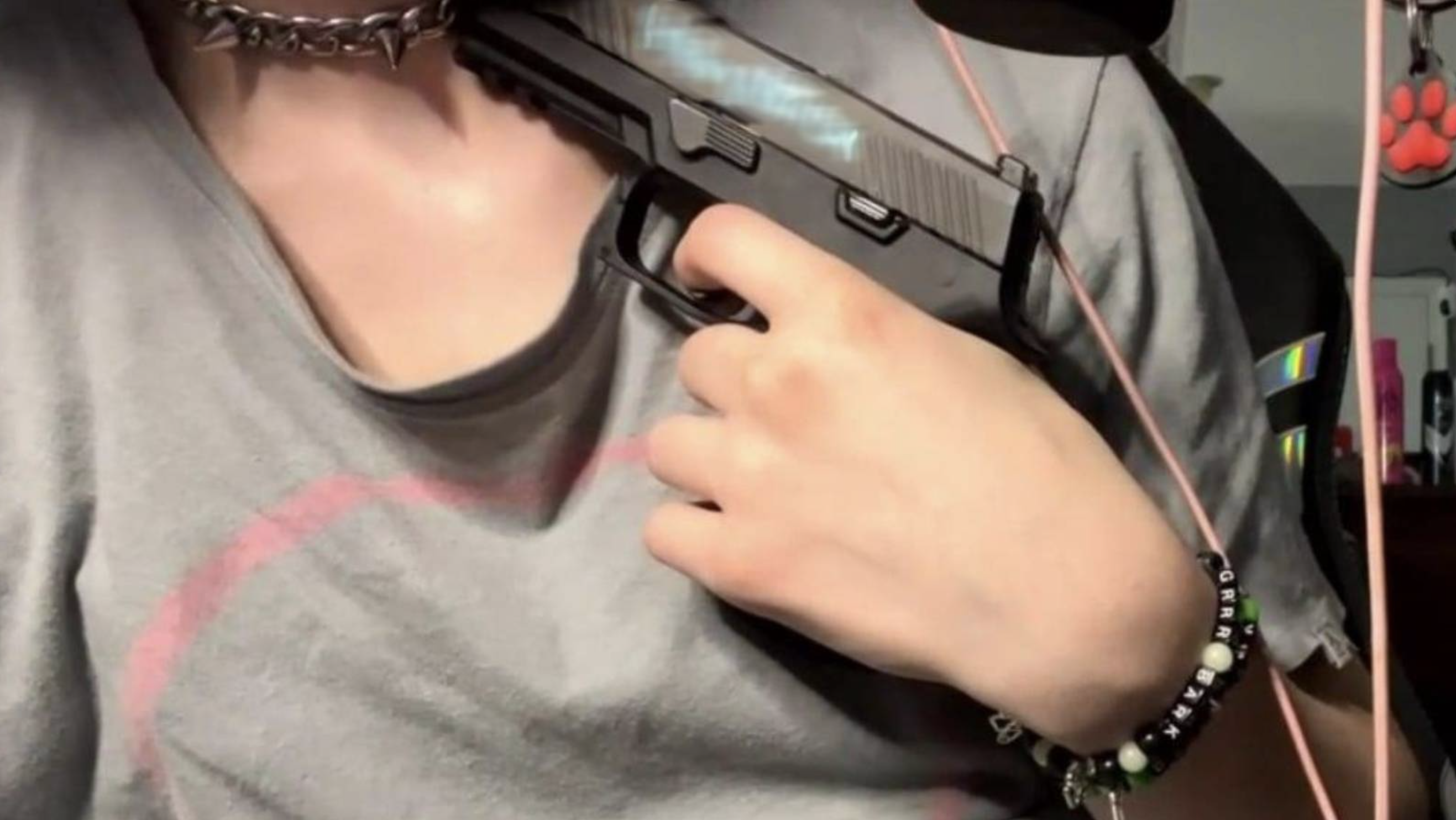

As always with 764, the truth is murky, because the claims of connections to the O9A aren't without merit. Angel Almeida, a member of the 764 collective who was arrested in 2021, is its most obvious link to O9A. Almeida, like the rest of those arrested in connection to the group, is facing a plethora of child pornography charges in New York.

“The defendant attempted to incite and spread violence—sharing images of himself brandishing a firearm, of children being bound and raped, and of animals being stabbed and beaten,” reads the court response related to Almeida’s motion to suppress evidence. “He targeted minors online, including Jane Doe-1 and Jane Doe-2, desensitizing them to violence by distributing CSAM and, in the case of Jane Doe-2, inundating her with 764 doctrines. And he coerced these victims into producing pornographic content of themselves. With Jane Doe-2, the defendant’s coercion went so far as to hold her at gunpoint, drink her blood, and engage in criminal sexual acts.”

Upon Almeida’s arrest, he was found to have multiple physical objects connected to O9A, including patches, a flag, books, and a piece of paper with either his blood or that of one of his victims on it, seemingly connected to an occult O9A ritual.

764 users have also tied themselves to other esoteric groups that are on the radar of terrorism researchers. This includes sharing neo-Nazi manifestos within their community and promoting mass killings. One Eastern European group in particular, which VICE News will not be naming, has declared a “partnership” with the 764 community.

Because of the extreme crimes and its portrayal of itself as a “global satanic pedophile cult,” 764 has been the focus of some citizen journalists in the true-crime realm on platforms such as YouTube and TikTok. Researchers worry they’re sharing propaganda of the cult and falling into some of the traps the group sets to entice victims into their webs.

In many cases, the crumbs that have been left online were left specifically by the group in the hopes of enticing people to search them out or making them out to be something they’re not. These traps include Reddit posts purportedly written by victims (one of which I fell for), entries on Urban Dictionary, posts on TikTok, and so on.

Barrett Gay and M.B. Tyler, two of the authors of the GNET piece about 764, told VICE News that the abusers within 764 want to be portrayed as an all-powerful cult, which elevates the group into something larger than it is. Sensationalistic and overly simplistic coverage of the group, say experts, plays into its hands.

“People trying to do vigilante-style coverage actually may end up helping these perpetrators more than it would be hurting them,” said Tyler. “Covering this like ‘look at this spooky creepy scary thing weird thing we found on the internet’ isn’t helping anyone.

"They're just spreading propaganda without meaning to,” he added. “They're doing more harm than good."

Cyber vigilantism

The shocking and extreme nature of the crimes has unsurprisingly attracted some who want to fight back.

In the spring of 2023, Tar, a man who infiltrated the groups, first got in touch with me. He told me that I had played right into a trap laid by 764 on Reddit. A 764 abuser had posed as a victim warning others not to look into 764—the hope was this would pique a macabre interest in the group and send possible victims looking. A screenshot of my request to speak to the fake poster was shared among the group.

Tar runs a group, which VICE promised not to name, that actively tries to hunt down the groomers and turn the tables on them. What this group and others like it do is oftentimes illegal. Some of the hunters will repost sextortion content, which means they are sharing CSAM—a clearly illegal act. Tar and his group knew what they did was skirting the legal system and didn’t have any qualms about it. They believed they were on the moral side of the fight.

“At the end of the day, I think these groups need to be brought to light because the average Joe Schmo doesn't realize how much information their child is leaving, and sometimes it's not even a child, it could be the parents,” Tar said. “They don't realize what kind of digital footprint that they're leaving and that's what these guys are preying on.”

Cyber vigilantism is as old as the internet, and while many of those who partake in online “pedo-hunting” likely believe they’re helping, it is something that experts don’t recommend. This kind of action can bring vigilantes into contact with illegal material, make them susceptible to becoming victims themselves, and interfere with and possibly ruin law enforcement investigations.

"The laws that are in place really need to be in place,” said Sauer. “That's one of the reasons why an organization like ours is important because we do have a lens into what's happening in all these online spaces. Even if it's a limited lens we can provide that type of information without overexposing.”

Tar and his group are far from the only community that is attempting to push back or shine a light on 764. There are a plethora of “pedo hunting” channels on Telegram that have attempted to show the horrors that lurk within these chatrooms. They tend to share CSAM, something that is every bit as illegal when done by those who believe they’re doing so righteously as when done by those who have produced the material.

Members of Tar’s group, however, took the fight directly to those within 764. They would try to get themselves into the good graces of predators by being useful—some would even edit the abusers' videos showing off their victims, one of a variety of “jobs.” These jobs are things that need to be done to keep the community sustainable, and include things like serving as a “librarian” who will host the content. Tar told VICE News that many of the sextortionists, children with large egos, would be relatively loose with their info inside the private areas.

“People get more comfortable and they speak more freely about their acts and things that they've done to children in the past or things that they want to do to children,” said Tar. “People will post images of themselves because they fly so close to the Sun that they want their fucking wings to melt.”

The group would attempt to collect information on the sextortionists and use it to either dox the abusers on a site known for hosting people’s data or actively extort them. Tar showed VICE screenshots showing that within the chats, the groomers were extremely aware that they were open to being targeted by law enforcement groups and would even share videos of themselves being raided. Tar believes that these groups are selling the content they create in this room where children are abused. “I would presume that that would be recorded and sold on the dark web,” he said.

Tar said he once saw a group of children get pulled into orbit in less than 10 minutes—he shared evidence of this with VICE News—by a young woman on TikTok who was initially a victim. Once in a group chat with the abuser, a graphic video of a child abusing themselves sexually was shared. All the children initially reacted in horror but were then told to share it with other people to shock them as they had been shocked.

"Yo, [the name of the groomer], I'm going to spread all your CP on Telegram," you can hear one of the people say. "I'm going to traumatize kids. Fuck their lives homie. Let's see who should we start with?"

According to Kriner, this kind of activity—getting in too deep—makes it hard for both an abuser and victim to leave.

"Once you engage with that taboo. It's so far gone for many people that you can't just walk away as if it never happened. You sort of get stuck. Either you commit further to it, or you have to incur the costs of what you did. And those costs can be extraordinarily high."

Surviving an online hell

When Ali heard that Limkin was arrested, she couldn’t believe her ears.

"I was in shock, I was relieved, I could not believe it,” she said. “I was just so shocked that something had actually been done because so many of the girls who report things that have happened to them online, it does not carry through whatsoever.

“I called my mom right away and was like ‘Hey, like I guess who’s finally in prison!’”

Ali is now working on healing after her experience within the group, but she’s wary of Limkin’s associates, who are still out there. She knows that while Cultist is still down, there are other servers active, and that even the news about his arrest could drive users to this community, where they could fall into the horrific web of these sextortionists.

"I know a lot of them have deactivated their social media or Discord accounts because of this arrest, which makes me happy in a way, but also not, because now they can't be caught for what they've been doing if they have this time [to protect themselves],” said Ali.

‘You can get out of it’

She wants other young people to not implicitly trust someone because they’re younger.

“You don't think that a 17-year-old is going to be an awful pedophilic pervert or someone who could cause harm to you because you associate age with power a lot of the time. So I think it's really important to realize that even though they might be close to your age they are not normal people, and they do have the capacity to hurt you or to harm you, to extort you.”

Furthermore, she wants others who have been in her situation to know that it’s not their fault, and that they can escape the hold their abusers have on them and come out the other side. Those she was dealing with followed through on their threats and sent content of her to her parents, and she said the worst-case scenario was survivable.

“It's not your fault that they took the person you are and manipulated it to extort you,” she said. “You can get out of it. You can talk to the police. You can get protection if you're worried about them doing something to you or your family. A lot of it is empty threats to get you to continuously comply.

“There is a way out. Talk to someone. It’s better than constantly feeling trapped in a situation like that.”

If you or somebody you know are being targeted or abused by sextortionists you do have options. If you can’t reach out to a loved one or law enforcement you can reach out to the National Center for Missing & Exploited Children and/or use their Take It Down app to anonymously remove your images from most platforms.